Attentional and Perceptual Information Processing

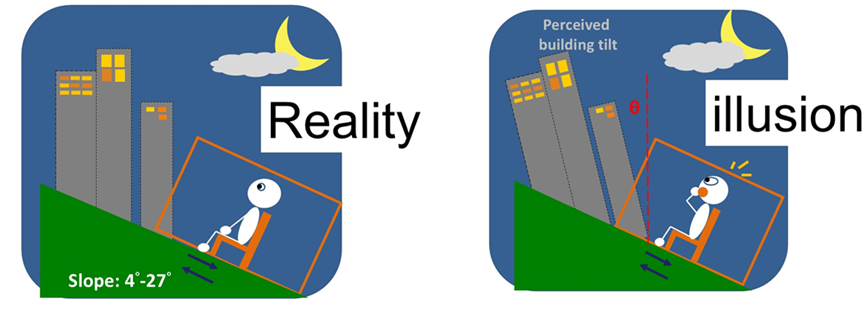

To navigate daily tasks, our brains have evolved efficient and effective mechanisms that allow us to (1) focus on selected features and locations, and (2) ignore unselected information. At the same time, we use various compensation systems to keep our visual world stable and consistent even though we move our eyes and bodies frequently. We study how our attentional and perceptual systems operate using psychophysical methods, neurophysiological and neuroimaging techniques (e.g. SSVEP, EEG, fMRI), and computational modelling.

We used steady-state visual evoked potentials (SSVEPs) to discover that visual attention can precisely adjust its focus based on spatial size tuning.

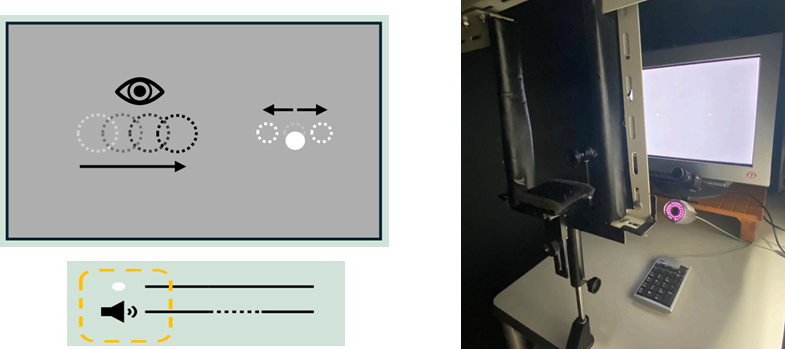

During saccadic eye movements, human observers are insensitive to object displacement, a phenomenon termed Saccadic Suppression of Displacement (SSD). We reported an analogous case in which auditory blanking facilitates recovery from saccadic suppression.

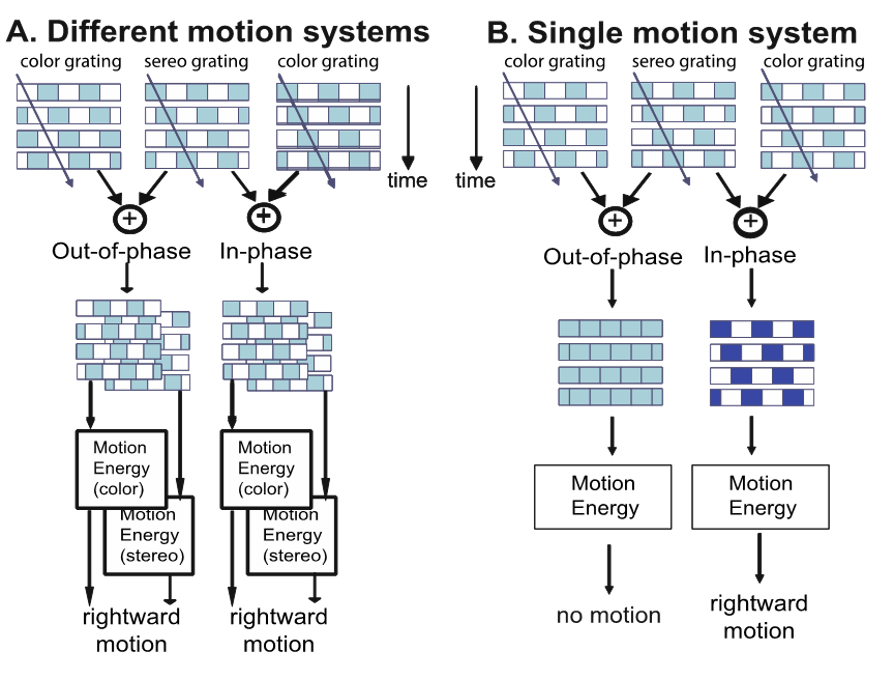

We build conceptual models and design psychophysical experiments to test them. The results guide the design of physiological experiments aimed at understanding the underlying neural mechanisms.

Publications

- Chen, G., Hatori, Y., Tseng, C.H., & Shioiri, S. (2025). Adaptive focus: Investigating size tuning in visual attention using SSVEP. Journal of Vision, 25(6), 1-8.

- Chow, D., Ma, J.L., Shioiri, S., & Tseng, C.H. (2025). Audio blanking effect of saccadic suppression of target displacement. Vision Science Society Meeting, Florida, USA.

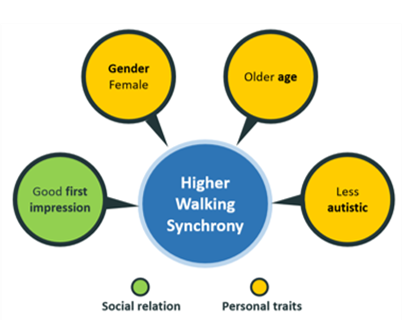

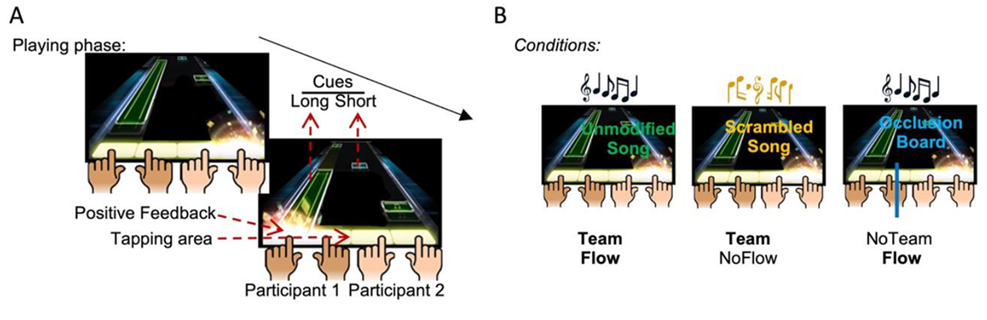

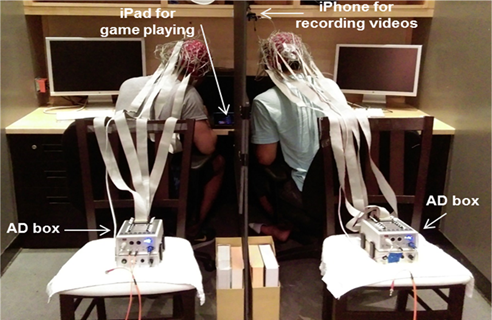

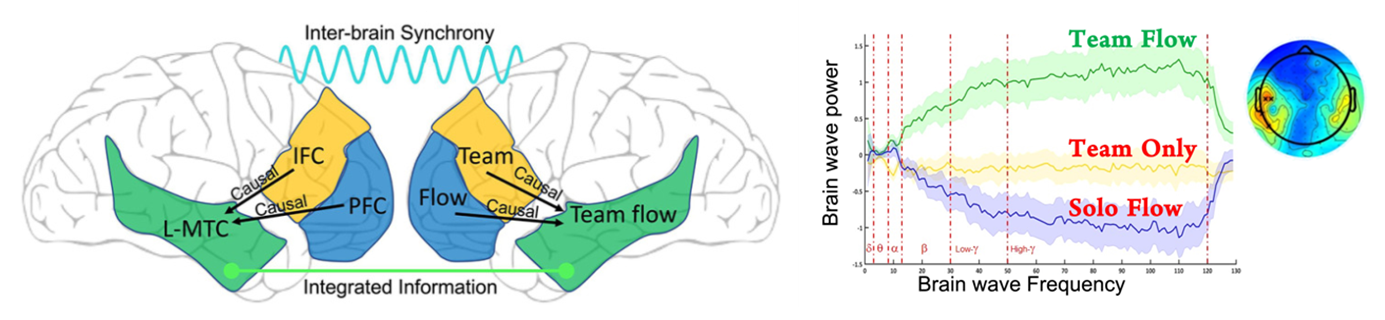

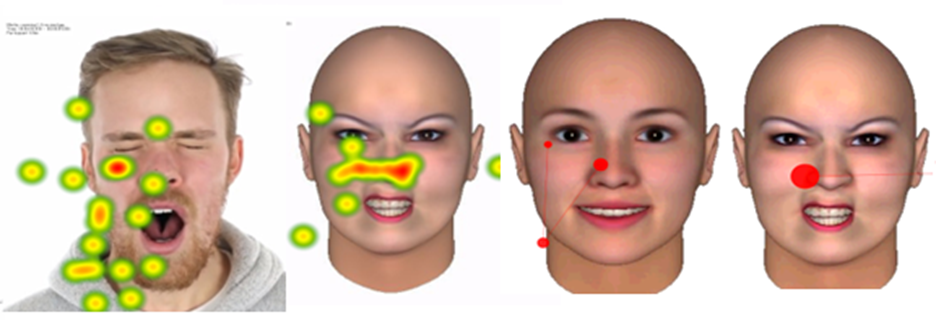

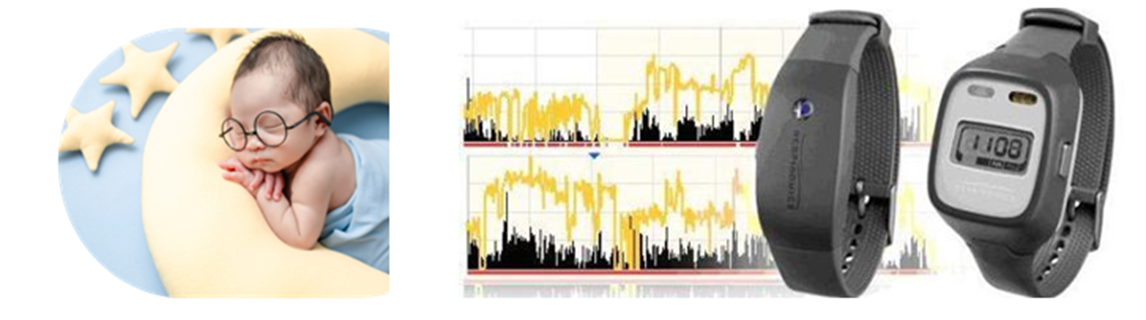

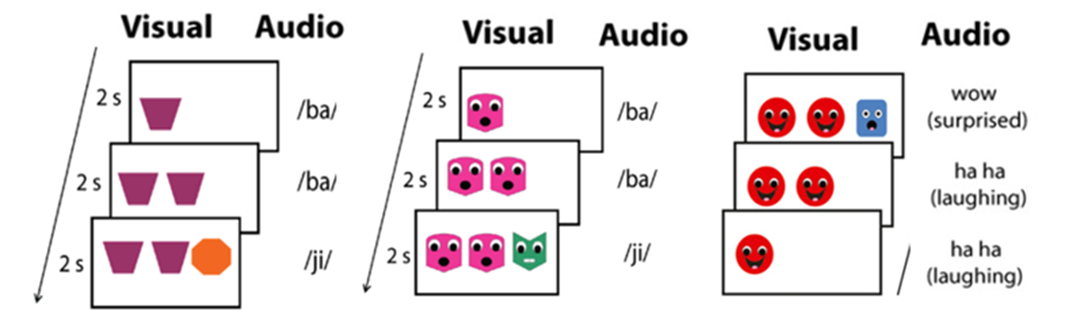

Decoding Individual and Team Psychological States from Non-verbal Information

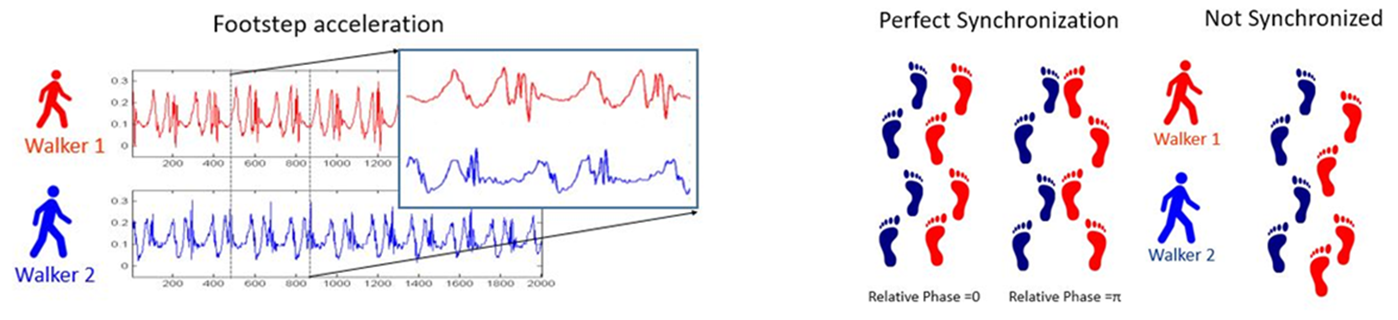

Social communication is vital for our well-being and team productivity. The recent outbreak of COVID-19 has made online social interaction a significant and central part of our lives. We now use online systems for many kinds of business and leisure activities (e.g. classes, work meetings, social parties). However, for many people, online communication has more barriers and is less satisfying than in-person communication. One possible reason is that it is harder to feel “together,” i.e. ittaikan (一体感), with other people online, and harder for an online group or team to form a sense of “oneness.” We combine psychophysical behavioral experiments and machine learning techniques to decode the psychological states of individual members (e.g. engaged, in need of help) and of the team (e.g. togetherness, co-presence).

We propose viewing MA as a tension network that holds the bars together and apart at the same time. The black bars represent different categories of events, temporal moments, spatial locations, or subjectivities. MA helps maintain the integrity of the configuration by balancing the pushes and pulls in this structure, generating a holistic experience by holding the differences together.

Publications